Meter accuracy discussion sometimes not very accurate at all

In this month’s edition of our Fundamentals of Metering series, we look at a word that is often misused, misunderstood, and misrepresented: accuracy. Electric meters are required to adhere to an accuracy rating, and as such, field test equipment that is used to verify this rating also has an accuracy rating. What do we mean by “accuracy” though? We hear the term frequently, in statements such as:

- That is an accurate analysis.

- The archer’s accuracy is unnerving.

- The product’s accuracy is 1%.

Definition of Accuracy

The term is used in several ways, and its definition depends on the usage. The Merriam-Webster dictionary defines accuracy as:

- Freedom from mistake or error

- Conformity to truth or to a standard or model

- Degree of conformity of a measure to a standard or a true value

These three definitions are related, yet they differ in important ways. Let’s try using the word “accuracy” in a sentence that relates to the above definitions.

“His work was always accurate.”

In this case we could also say: “His work was free from mistake or error.”

That definition seems to fit our purpose when we’re describing a meter testing job, doesn’t it?

Well, not really.

When dealing with meters and test equipment, we are actually looking for a different definition. When we test a meter with a stated accuracy of 0.2%, that doesn’t refer to a test that is free from mistake or error. Instead, the meter’s accuracy rating refers to its ability to conform to a standard, model, or true value. In every scenario conceivable to the manufacturer or outlined in a testing standard such as ANSI C12.1, the meter will report its measurements within 0.2% of the actual value it sees.

So, let’s back up and attempt to clarify. A new sentence using the word accuracy could be written as “The meter’s stated accuracy is 0.2%.” But you could also read this sentence as “The meter’s measurement will agree with a reference standard within 0.2%.”

It’s still a little vague, because the new word “standard” is now being used. Don’t worry: next month we’re going to dive into the definition of “standard.” For now, all we need to know is that a standard is an artifact or calculation that bears a defined relationship to a fundamental unit of measurement, such as length, mass, or watts of electric power.

When quantifying the “reliability” of some measured value, we “refer” to the standard to see how closely we agree. The point of having a reference standard is that if the measurement differs from the standard, then the device under test is “wrong” and needs to be corrected or replaced.
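The comparison against a reference standard described above can be sketched in a few lines of Python. This is only an illustration: the function names and the readings are hypothetical, and it assumes the error is expressed as a percentage of the reference reading.

```python
def percent_error(meter_reading: float, reference_reading: float) -> float:
    """Signed error of the meter relative to the reference standard, in percent."""
    if reference_reading == 0:
        raise ValueError("reference reading must be non-zero")
    return (meter_reading - reference_reading) / reference_reading * 100.0


def within_stated_accuracy(meter_reading: float,
                           reference_reading: float,
                           stated_accuracy_pct: float = 0.2) -> bool:
    """True if the meter agrees with the reference within its stated accuracy."""
    return abs(percent_error(meter_reading, reference_reading)) <= stated_accuracy_pct


# Hypothetical readings in watt-hours, for illustration only.
print(within_stated_accuracy(1001.5, 1000.0))  # error is about 0.15%
print(within_stated_accuracy(1003.0, 1000.0))  # error is about 0.30%
```

With a stated accuracy of 0.2%, the first reading passes and the second does not.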

Definition of Precision

Another point of confusion arises when the words “precise” and “precision” are used interchangeably with the term “accuracy”.

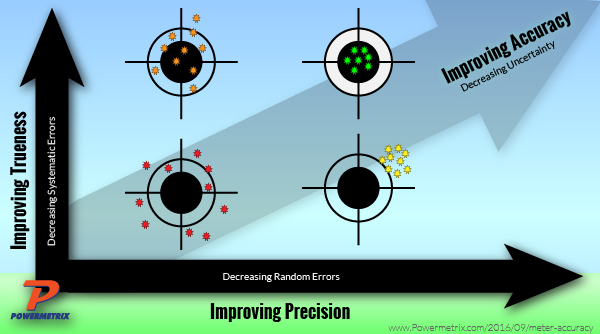

To demonstrate this concept, think about how a device can be:

- Accurate, but not precise.

- Precise, but not accurate.

- Neither precise nor accurate.

- Both precise and accurate.

Ideally, the last option is the preferred outcome in metering applications: we want the test to be both accurate and precise. As mentioned above, accuracy is the closeness of the measurement to a standard. The precision of a device is how closely it agrees with itself over multiple measurements. The target diagram above demonstrates this relationship.

The upper right target, with the dots in the bulls-eye, represents the perfect testing scenario. If a device under test conditions is not accurate, its measurements will not match reality – they may be far from the bulls-eye. If it is not precise, the measurements will vary and not be repeatable – they will not cluster consistently.

To be useful, a test kit must be both accurate and precise. The results need to be repeatable and within the stated uncertainty of the device. This is one reason calibration and maintenance of test equipment are extremely important. Over time, measurements may drift. This can be due to damage, age, or just exposure to varying testing conditions. When a portable reference standard is calibrated and adjusted, it is compared to a reference standard under lab conditions and then fine-tuned until it is once again accurate and precise.
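One way to put numbers on the distinction between accuracy and precision is to look at repeated readings of the same quantity. The sketch below is an assumption about how one might summarize them, not a prescribed procedure: it treats the average offset from the reference as the accuracy (bias) and the sample standard deviation as the precision (spread). The readings are made up for illustration.

```python
from statistics import mean, stdev


def summarize(readings, reference):
    """Return (bias, spread) for repeated readings of one quantity.

    bias   = mean reading minus reference (how accurate the device is)
    spread = sample standard deviation (how precise, i.e. repeatable, it is)
    """
    bias = mean(readings) - reference
    spread = stdev(readings)
    return bias, spread


reference = 100.0  # hypothetical value supplied by the reference standard

# Tightly clustered but off target: precise, not accurate.
precise_not_accurate = [102.0, 102.1, 101.9, 102.0]
# Centered on target but scattered: accurate on average, not precise.
accurate_not_precise = [98.0, 102.0, 99.0, 101.0]

for name, readings in [("precise, not accurate", precise_not_accurate),
                       ("accurate, not precise", accurate_not_precise)]:
    bias, spread = summarize(readings, reference)
    print(f"{name}: bias={bias:+.2f}, spread={spread:.2f}")
```

A small spread with a large bias corresponds to a tight cluster away from the bulls-eye. A small bias with a large spread corresponds to dots scattered around it.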

Testing

When performing a meter test in the field, using equipment such as the PowerMaster 3 Series with an internal reference standard is preferred. For auditing and legal purposes, the accuracy of the field standard should be “traceable” to a recognized metrology lab such as the National Institute of Standards and Technology (NIST). This means that any lab test equipment used to verify the field standard has itself been verified against other traceable equipment, and that each comparison in that chain is certified as having a guaranteed maximum error.

So, we have both a meter and test equipment with stated accuracies. How do we determine which is correct? The ISO 17025 lab testing standard specifies that, to verify a device with a given stated accuracy, the reference device must be at least four times more accurate than the device under test. For a 0.2% meter (a typical rating), the test equipment’s accuracy must therefore be 0.05% or better (0.2% ÷ 4). Currently there is no testing standard that addresses the field testing of meters, so ANSI C12.1 is used as a guide.
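The four-to-one arithmetic above is simple enough to check by hand, but a short sketch makes the rule explicit. The function names are hypothetical, and the ratio of 4 is taken from the paragraph above rather than from any particular clause of a standard.

```python
import math


def required_reference_accuracy(dut_accuracy_pct: float, ratio: float = 4.0) -> float:
    """Worst acceptable accuracy (in percent) for the reference device."""
    return dut_accuracy_pct / ratio


def reference_is_adequate(dut_accuracy_pct: float,
                          reference_accuracy_pct: float,
                          ratio: float = 4.0) -> bool:
    """True if the reference is at least `ratio` times more accurate than the device under test."""
    limit = required_reference_accuracy(dut_accuracy_pct, ratio)
    # isclose guards against floating-point noise when the values sit exactly on the limit.
    return reference_accuracy_pct < limit or math.isclose(reference_accuracy_pct, limit)


print(required_reference_accuracy(0.2))   # limit for a 0.2% meter
print(reference_is_adequate(0.2, 0.05))   # a 0.05% reference meets the 4:1 rule
print(reference_is_adequate(0.2, 0.1))    # a 0.1% reference does not
```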

When performing a field test, how do we determine if the meter is truly accurate? Do we pull the meter and place it in a test board? Or do we test the meter in the socket with a voltage and/or a current source? Both methods can verify the meter’s accuracy; however, only testing the meter under customer load will tell you if the meter is accurate under true billing conditions.

Accuracy and measurement terms can be confusing at times, but the key points to remember are:

- Accuracy is measured relative to a reference standard.

- Accuracy is how closely the measurement agrees with the standard.

- Precision is the repeatability (consistency) of multiple measurements.

- Calibration is crucial to maintaining the accuracy and precision of test equipment.

When considering a new item purchase, do not be afraid to ask questions. If your questions are not answered to your satisfaction, ask for outside assistance. Here at Powermetrix, we are always happy and willing to assist our customers.

Got questions, comments or stories? Share them below!

When the accuracy of an instrument is stated as ±2 units, does it mean that when all measured values are compared with the reference value, the differences (which I call the error e) must 100% fall within the range -2 ≤ e ≤ +2 units?

Hi Emech. If “e” is the error you calculate relative to your reference standard, then yes, 100% of those values should fall within that range.